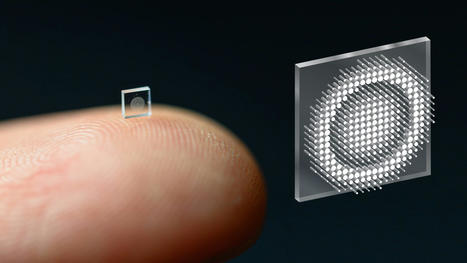

Micro-sized cameras have great potential to spot problems in the human body and enable sensing for super-small robots, but past approaches captured fuzzy, distorted images with limited fields of view. Now, researchers at Princeton University and the University of Washington have overcome these obstacles with an ultracompact camera the size of a coarse grain of salt. The new system can produce crisp, full-color images on par with a conventional compound camera lens 500,000 times larger in volume, the researchers reported in a paper published Nov. 29 in Nature Communications. Enabled by a joint design of the camera’s hardware and computational processing, the system could enable minimally invasive endoscopy with medical robots to diagnose and treat diseases, and improve imaging for other robots with size and weight constraints. Arrays of thousands of such cameras could be used for full-scene sensing, turning surfaces into cameras. While a traditional camera uses a series of curved glass or plastic lenses to bend light rays into focus, the new optical system relies on a technology called a metasurface, which can be produced much like a computer chip. Just half a millimeter wide, the metasurface is studded with 1.6 million cylindrical posts, each roughly the size of the human immunodeficiency virus (HIV). Each post has a unique geometry, and functions like an optical antenna. Varying the design of each post is necessary to correctly shape the entire optical wavefront. With the help of machine learning-based algorithms, the posts’ interactions with light combine to produce the highest-quality images and widest field of view for a full-color metasurface camera developed to date.

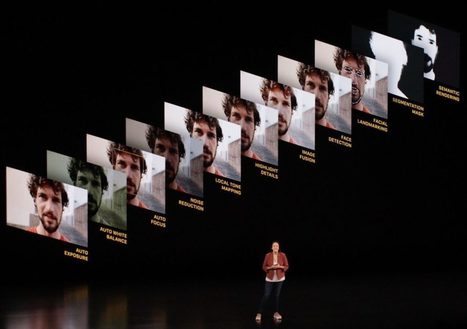

Capturing light is becoming a thing of the past: with computational photography, it is now eclipsed by processing pixels.

iPhone 11 and its “Deep Fusion” mode leave no doubt that photography is now software and that software is also eating cameras.

Light is the fastest thing in the universe, so trying to catch it on the move is necessarily something of a challenge. We’ve had some success, but a new rig built by Caltech scientists pulls down a mind-boggling 10 trillion frames per second, meaning it can capture light as it travels along — and they have plans to make it a hundred times faster. Understanding how light moves is fundamental to many fields, so it isn’t just idle curiosity driving the efforts of Jinyang Liang and his colleagues — not that there’d be anything wrong with that either. But there are potential applications in physics, engineering, and medicine that depend heavily on the behavior of light at scales so small, and so short, that they are at the very limit of what can be measured. You may have heard about billion- and trillion-FPS cameras in the past, but those were likely “streak cameras” that do a bit of cheating to achieve those numbers. If a pulse of light can be replicated perfectly, then you could send one every millisecond but offset the camera’s capture time by an even smaller fraction, like a handful of femtoseconds (roughly a trillion times shorter). You’d capture one pulse when it was here, the next one when it was a little further, the next one when it was even further, and so on. The end result is a movie that’s indistinguishable in many ways from if you’d captured that first pulse at high speed. This is highly effective — but you can’t always count on being able to produce a pulse of light a million times the exact same way. Perhaps you need to see what happens when it passes through a carefully engineered laser-etched lens that will be altered by the first pulse that strikes it. In cases like that, you need to capture that first pulse in real time — which means recording images not just with femtosecond precision, but only femtoseconds apart. That’s what the T-CUP method does.
It combines a streak camera with a second static camera and a data collection method used in tomography. “We knew that by using only a femtosecond streak camera, the image quality would be limited. So to improve this, we added another camera that acquires a static image. Combined with the image acquired by the femtosecond streak camera, we can use what is called a Radon transformation to obtain high-quality images while recording ten trillion frames per second,” explained co-author of the study Lihong Wang. That clears things right up! At any rate the method allows for images — well, technically spatiotemporal datacubes — to be captured just 100 femtoseconds apart. That’s ten trillion per second, or it would be if they wanted to run it for that long, but there’s no storage array fast enough to write ten trillion datacubes per second to. So they can only keep it running for a handful of frames in a row for now — 25 during the experiment you see visualized here.

Those 25 frames show a femtosecond-long laser pulse passing through a beam splitter — note how at this scale the time it takes for the light to pass through the lens itself is nontrivial. You have to take this stuff into account!
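The figures quoted above can be checked with a few lines of arithmetic. This is only an illustrative sketch: the 100-femtosecond spacing and the 25-frame burst come from the article, while the function and variable names are made up for the example, and the burst span is simply taken as 25 frame intervals.

```python
FEMTOSECOND = 1e-15  # seconds
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def frames_per_second(frame_interval_s: float) -> float:
    """Frame rate implied by a fixed interval between consecutive frames."""
    return 1.0 / frame_interval_s


frame_interval = 100 * FEMTOSECOND            # spacing quoted in the article
rate = frames_per_second(frame_interval)      # about 1e13, i.e. ten trillion
burst_span = 25 * frame_interval              # assumed: 25 frames at that spacing
light_step = SPEED_OF_LIGHT * frame_interval  # distance light covers per frame

print(f"frame rate: {rate:.3g} frames per second")
print(f"25-frame burst: {burst_span * 1e12:.2f} picoseconds")
print(f"light travel per frame: {light_step * 1e6:.1f} micrometres")
```

In other words, the whole recorded burst lasts only a few picoseconds, and light advances by roughly 30 micrometres between frames, which is why the transit through an optical element shows up across several frames.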

Multiple sources tell us that Google is acquiring Lytro, the imaging startup that began as a ground-breaking camera company for consumers before pivoting to use its depth-data, light-field technology in VR.

Emails to several investors in Lytro have received either no response, or no comment. Multiple emails to Google and Lytro also have had no response.

But we have heard from several others connected either to the deal or the companies.

One source described the deal as an “asset sale” with Lytro going for no more than $40 million. Another source said the price was even lower, $25 million, and that it was shopped around: to Facebook, according to one source, and possibly to Apple, according to another. A separate person told us that not all employees are coming over with the company’s technology: some have already received severance and parted ways with the company, and others have simply left.

Assets would presumably also include Lytro’s 59 patents related to light-field and other digital imaging technology.

The sale would be far from a big win for Lytro and its backers. The startup has raised just over $200 million in funding and was valued at around $360 million after its last round in 2017, according to data from PitchBook. Its long list of investors includes Andreessen Horowitz, Foxconn, GSV, Greylock, NEA, Qualcomm Ventures and many more. Rick Osterloh, SVP of hardware at Google, sits on Lytro’s board.

A price tag of $40 million is not quite the exit that was envisioned for the company when it first launched its camera concept, and in the words of investor Ben Horowitz, “blew my brains to bits.”

If ever there was a sport that required rapid-fire photography, Formula One racing is it. Which makes what photographer Joshua Paul does even more fascinating, because instead of using top-of-the-range cameras to capture the fast-paced sport, Paul chooses to take his shots using a 104-year-old Graflex 4×5 view camera. The photographer clearly has an incredible eye for detail, because unlike modern cameras that can take as many as 20 frames per second, his 1913 Graflex can only take 20 pictures in total. Because of this, every shot he takes has to be carefully thought about first, and this is clearly evident in this beautiful series of photographs.

Intel inked a deal to acquire Mobileye, which the chipmaker’s chief Brian Krzanich said enables it to “accelerate the future of autonomous driving with improved performance in a cloud-to-car solution at a lower cost for automakers”. Mobileye offers technology covering computer vision and machine learning, data analysis, localisation and mapping for advanced driver assistance systems and autonomous driving. The deal is said to fit with Intel’s strategy to “invest in data-intensive market opportunities that build on the company’s strengths in computing and connectivity from the cloud, through the network, to the device”. A combined Intel and Mobileye automated driving unit will be based in Israel and headed by Amnon Shashua, co-founder, chairman and CTO of the acquired company. This, Intel said, “will support both companies’ existing production programmes and build upon relationships with car makers, suppliers and semiconductor partners to develop advanced driving assist, highly-autonomous and fully autonomous driving programmes”.

Photography startup Light has launched the L16, which the company is calling "a multi-aperture computational camera," at the Code/Mobile conference. It's named L16 because it's equipped with 16 individual lenses, though unlike bulky and heavy DSLRs, Recode says it's just about the size of a Nexus 6 that's double the thickness. When you take a picture using the camera, all 16 lenses capture photos simultaneously at different focal lengths in order to "capture more data in every shot." Light's technology then combines all of them into a single 52-megapixel image -- you can adjust the photo's depth of field, focus and exposure after it's been captured.

The L16 runs on Android and has built-in WiFi, allowing you to post pictures directly from the device. It comes with an integrated 35mm-150mm optical zoom and a five-inch touchscreen display. If you think you'll be able to save money switching to this from an entry-level or mid-range DSLR, though, you're sadly mistaken. It might not cost as much as high-end cameras, but it'll still set you back $1,299 when you pre-order from today until November 6th from Light's website. When it starts shipping in the summer of 2016, you'll have to shell out $1,699 to get one.

With the Illum, Lytro is targeting a more specialized market. In addition to the 8X (30 – 250mm) zoom lens, it has a constant f/2.0 aperture, 1/4000 shutter, and a four-inch backside touchscreen display. According to the company, the new sensor can capture 40 million light rays (Lytro doesn’t list megapixels) to the original’s 11 million. Its desktop processing software works with traditional products, like Adobe’s Photoshop and Lightroom. Photographers can use the camera’s software to refocus pictures after the fact, generate 3-D images, adjust the depth of field, and create tilt shifts. It will be available in July for $1,500. Beyond its lens and bigger sensor, there are other ways the Illum surpasses Lytro’s original model. For example, because it’s such a different concept than most photographers are accustomed to, the camera has built-in software that color codes the display with depth information. It effectively previews the depth range you’ll have to work with once you shoot a photo. This, Lytro says, is to help photographers start to think in three dimensions. Afterwards, photographers can export the images to traditional formats, or thanks to WebGL, publish them online in ways that let people interact with them and manipulate them later.

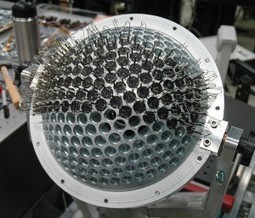

Array camera start-up Pelican Imaging Corp. has closed a $20 million Series C round of funding, bringing the total invested in the company to more than $37 million since its formation in 2008. New investors include Qualcomm Ventures and Nokia Growth Partners, who have joined existing investors Globespan Capital Partners, Granite Ventures, InterWest Partners and In-Q-Tel. Pelican Imaging has developed an array sensor architecture together with algorithms and software for image reconstruction, gesture recognition and other functions. Instead of using a single, high-resolution but expensive CMOS image sensor, the array camera averages multiple images to produce a single, enhanced-resolution image. According to CTO Kartik Venkataraman, the principal advantages are reduced z height, superior performance under low light conditions, and the ability to offer 3D functions and gesture recognition. Venkataraman cofounded Pelican Imaging in 2008 with then-CEO Aman Jabbi.

To me, photographic gear is like gourmet food. I see cameras as the entrées, lenses and other equipment are the side dishes and wines. While compact cameras are the sweet desserts. Sometimes, the d...

Via kris phan, Gary Pageau

Samsung Electronics says it is “creating a brand new type of device” with its Galaxy Camera, “for those who wish to shoot, edit and share high quality photographs and video easily and spontaneously from anywhere, at any time.”

As a camera, this is a pretty strong contender: The EK-GC100 Galaxy Camera has a 16-megapixel, 1/2.33-inch BSI CMOS sensor. The 21x lens zooms from 23-480mm, with an f/2.8 – 5.9 aperture.

A new tiny camera captures better-than-HD video. The latest device from Point Grey comes “in an ice-cube sized, low-cost package,” says the British Columbia-based industrial camera maker.

The FL3-U3-88S2C captures 4,096 x 2,160 video with an 8.8 megapixel Sony IMX121 Exmor R sensor. “The impressive 4K2K resolution combined with the ease of USB 3.0 and the camera’s small size makes the new Flea3 suitable for a variety of high resolution color applications including automatic optical inspection, ophthalmology, interactive multimedia, and broadcast,” the company says.

The Flea3 camera measures 29 x 29 x 30mm and uses USB 3 connectivity. It costs $945.


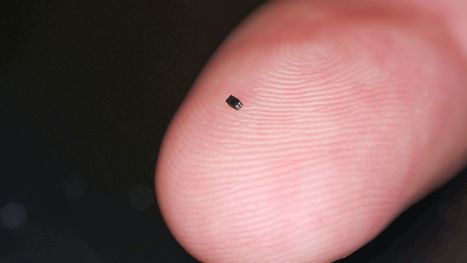

A specialist medical camera that measures just 0.65 x 0.65 x 1.158mm has just entered the Guinness Book of Records. The camera is the size of a grain of sand, though it is actually its tiny sensor that is being entered into the record book. The OmniVision OV6948 is just 0.575 x 0.575 x 0.232mm and produces a 40,000-pixel color image using an RGB Bayer back-side-illuminated chip. Each photosite measures just 1.75 µm across. The resolution may seem low, but the OVM6948-RALA camera is designed to fit down the smallest of veins in the human body, giving surgeons views that will aid diagnosis and surgical procedures. Previously the surgeon would carry out these operations blind, or use a much lower-resolution fibre optic feed. Manufactured by California-based OmniVision Technologies Inc, the sensor captures its imagery at 30fps, and its analog output can be transmitted over distances of up to 4m with minimal noise. The camera unit offers a 120° super-wide angle of view, so something like a 14mm on a full-frame camera. It gives a depth of field range from 3mm to 30mm.

Just hours after Oppo revealed the world’s first under-display camera, Xiaomi has hit back with its own take on the new technology. Xiaomi president Lin Bin has posted a video to Weibo (later re-posted to Twitter) of the Xiaomi Mi 9 with a front-facing camera concealed entirely behind the phone’s screen. That means the new version of the handset has no need for any notches, hole-punches, or even pop-up selfie cameras alongside its OLED display. It’s not entirely clear how Xiaomi’s new technology works. The Phone Talks notes that Xiaomi recently filed for a patent that appears to cover similar functionality, which uses two alternately-driven display portions to allow light to pass through to the camera sensor.

Lots of random sources seem to think some upcoming iteration of the iPhone X will feature three rear-facing cameras, either this year or next. So here we are: I’m telling you about these rumors, although I’m not convinced this is going to happen.

The most recent report comes from The Korea Herald, which claims that both Samsung’s Galaxy S10 and a new iPhone X Plus will feature three camera lenses. It isn’t clear what the third sensor would do exactly for Apple. Huawei incorporated three cameras into its P20 Pro — a 40-megapixel main camera, a 20-megapixel monochrome camera, and an 8-megapixel telephoto camera. Most outlets seem to think Apple’s third lens would be used for an enhanced zoom.

Earlier rumors came from the Taiwanese publication Economic Daily News and an investor note seen by CNET. Both of those reports indicated a 2019 release date for the phone. All of these rumors seem underdeveloped at the moment and, of course, even if Apple is testing a three-camera setup, the team could always change its mind and stick with the dual cameras. Still, if Apple wants to make an obvious hardware change to its phone cameras, a third lens would be one way to do it.

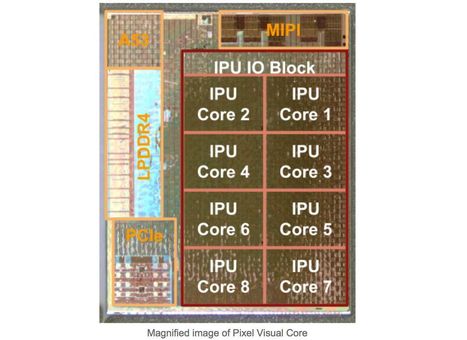

One thing that Google left unannounced during its Pixel 2 launch event on October 4th is being revealed today: it’s called the Pixel Visual Core, and it is Google’s first custom system-on-a-chip (SOC) for consumer products. You can think of it as a very scaled-down and simplified, purpose-built version of Qualcomm’s Snapdragon, Samsung’s Exynos, or Apple’s A series chips. The purpose in this case? Accelerating the HDR+ camera magic that makes Pixel photos so uniquely superior to everything else on the mobile market. Google plans to use the Pixel Visual Core to make image processing on its smartphones much smoother and faster, but not only that, the Mountain View company also plans to use it to open up HDR+ to third-party camera apps. The coolest aspect of the Pixel Visual Core might be that it’s already in Google’s devices. The Pixel 2 and Pixel 2 XL both have it built in, but lying dormant until activation at some point “over the coming months.” It’s highly likely that Google didn’t have time to finish optimizing the implementation of its brand-new hardware, so instead of yanking it out of the new Pixels, it decided to ship the phones as they are and then flip the Visual Core activation switch when the software becomes ready. In that way, it’s a rather delightful bonus for new Pixel buyers. The Pixel 2 devices are already much faster at processing HDR shots than the original Pixel, and when the Pixel Visual Core is live, they’ll be faster and more efficient.

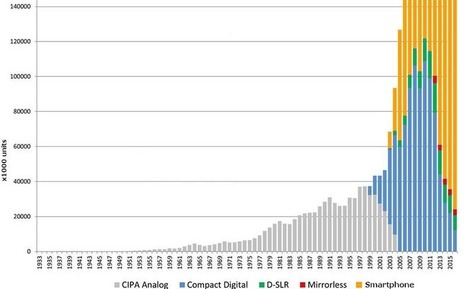

Last month, the Camera & Imaging Products Association (CIPA) released its 2016 report detailing yearly trends in camera shipments. Using that data, photographer Sven Skafisk has created a graph that makes it easy to visualize the data, namely the major growth in smartphone sales over the past few years and the apparent impact it has had on dedicated camera sales. The chart shows smartphone sales achieving a big spike around 2010, the same time range in which dedicated camera sales reached their peak. Each following year has represented substantial growth in smartphone sales and significant decreases in dedicated camera sales, particularly in the compact digital cameras category. Per the CIPA report, total digital camera shipments last year fell by 31.7% compared with the previous year. The report cites multiple factors affecting digital camera sales overall, with smartphones proving the biggest factor affecting the sales of digital cameras with built-in lenses. The Association's 2017 outlook includes a forecast that compact digital cameras will see another 16.7-percent year-on-year sales decrease this year. Skafisk's graph below shows the massive divide between smartphone sales and camera sales - be prepared to do some scrolling.

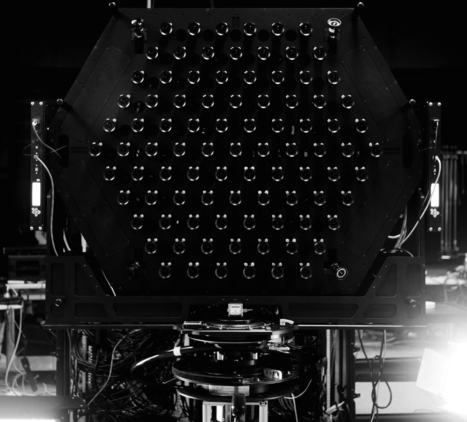

Ever-shifting camera tech company Lytro has raised major cash to continue development and deployment of its cinema-level camera systems. Perhaps the company’s core technology, “light field photography” that captures rich depth data, will be put to better use there than it was in the ill-fated consumer offerings. “We believe we have the opportunity to be the company that defines the production pipeline, technologies and quality standards for an entire next generation of content,” wrote CEO Jason Rosenthal in a blog post. Just what constitutes that next generation is rather up in the air right now, but Lytro feels sure that 360-degree 3D video will be a major part of it. That’s the reason it created its Immerge capture system — and then totally re-engineered it from a spherical lens setup to a planar one. .../... The $60M round was led by Blue Pool Capital, with participation from EDBI, Foxconn, Huayi Brothers and Barry Sternlicht. “We believe that Asia in general and China in particular represent hugely important markets for VR and cinematic content over the next five years,” Rosenthal said in a statement. It’s a hell of a lot of money, more even than the $50M round the company raised to develop its original consumer camera — which flopped. Its Illum follow-up camera, aimed at more serious photographers, also flopped. Both were innovative technologically but expensive and their use cases questionable.

Now that Nokia is out of the smartphone game, many of its key staff are undoubtedly considering their options. Senior Lumia engineer Ari Partinen, whom Nokia calls "(our) own camera expert," has just made his choice, and it's not Microsoft. He tweeted that he'll be "starting a new chapter in Cupertino," then confirmed that his new boss is indeed Tim Cook.

Researchers at MIT Media Lab have developed a $500 "nano-camera" that can operate at the speed of light. According to the researchers, potential applications of the 3D camera include collision-avoidance, gesture-recognition, medical imaging, motion-tracking and interactive gaming.

The team that developed the inexpensive "nano-camera" comprises Ramesh Raskar, Achuta Kadambi, Refael Whyte, Ayush Bhandari, and Christopher Barsi at MIT, and Adrian Dorrington and Lee Streeter from the University of Waikato in New Zealand. The nano-camera uses the "Time of Flight" method to measure scenes, a method also used by Microsoft for its new Kinect sensor that ships with the Xbox One. With Time of Flight, the location of objects is calculated by how long it takes for transmitted light to reflect off a surface and return to the sensor. However, unlike conventional Time of Flight cameras, the new camera will produce accurate measurements even in fog or rain, and can also correctly locate translucent objects.
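The basic Time of Flight relationship described above is simple enough to write down: the light travels out and back, so the distance is half the round trip multiplied by the speed of light. The snippet below is only a sketch of that textbook principle with hypothetical function names; it does not represent the MIT team's actual signal processing, which the excerpt does not describe.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to a surface given the out-and-back travel time of light."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Round-trip travel time of light for a surface at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


# Illustrative value: a 20-nanosecond round trip corresponds to about 3 metres.
example_distance = distance_from_round_trip(20e-9)
print(f"{example_distance:.3f} m")

# Resolving 1 cm of depth requires timing differences of tens of picoseconds.
timing_for_1cm = round_trip_for_distance(0.01)
print(f"{timing_for_1cm * 1e12:.1f} ps")
```

The second figure hints at why such sensors are hard to build cheaply: centimetre-level depth accuracy means resolving timing differences on the order of tens of picoseconds.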

As you know, Lytro’s new-fangled camera takes shots with depth: you can alter the point of focus, and subtly shift the point of view.

Unless you have the camera however, you can’t have a “light field” shot of your own… until now. For a few days, selected shots — even old treasured images — will be “Lytro-ized.”

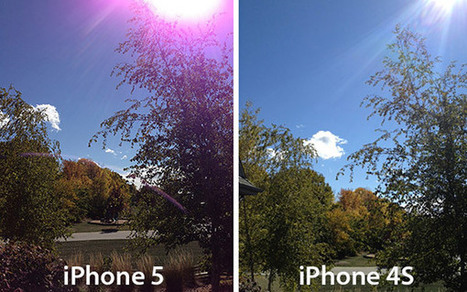

Over the past few days, iPhone 5 users have been reporting a "purple haze" prevalent in photos.

The issue is officially described as, "a purplish or other colored flare, haze, or spot is imaged from out-of-scene bright light sources during still image or video capture."

Apple does not officially acknowledge that the issue is specific to the iPhone 5; instead, it suggests that users reposition their camera when taking a photo.

The Leica APO-Telyt-R 1:5.6/1600mm, pictured above, is a massive telephoto lens that dwarfs any Leica camera that you attach to it. It’s the company’s longest, largest, and heaviest lens. It was produced as a custom order for one of the world’s wealthiest photography enthusiasts, Qatari prince Saud bin Muhammed Al Thani, who paid a whopping $2,064,500 for the hefty piece of glass.

“The camera’s resolution is five times better than 20/20 human vision over a 120 degree horizontal field” and 50 degrees vertical, the university reports. .../... The prototype takes about 18 seconds to shoot a black-and-white frame and record the data.



Camera design is increasingly relying on software processing as the lens blends into a chip.